Easy Access to Cloud-Native Applications in Multi/Hybrid Cloud

To stay ahead of competition, organizations are constantly looking for ways to drive innovation with speed and agility, while maximizing operational and economic efficiency at the same time. To that end, they have been migrating their applications to multi-cloud and hybrid cloud environments for quite some time.

Initially, these applications were moved to the cloud using a “lift-and-shift” approach, retaining their original monolithic architecture. However, such monolithic applications cannot fully exploit the benefits the cloud offers, such as elasticity and distributed computing, and they are also difficult to maintain and scale.

Consequently, as the next evolutionary step, organizations have started to rearchitect their existing applications or develop new ones as cloud-native applications.

A related aspect of deploying cloud-native applications is automating that deployment. Organizations deploying such applications have had success in automating the deployment of the underlying infrastructure on which these cloud-native applications run, as well as the initial deployment of the applications themselves. They have, however, struggled with the subsequent steps, such as making these applications accessible to end users, scaling the applications up and down, or moving them from one cloud to another.

The main reason is that the load balancers used to front-end these applications and make them accessible to end users were designed with monolithic applications in mind, and hence cannot keep pace with the agile manner in which cloud-native applications are deployed.

These load balancers were designed for a deployment process in which network resources for the applications are provisioned manually by network and security teams, a process that could take days if not weeks, and then manually configured on the load balancer. This process clearly inhibits achieving the goal of automation to which organizations aspire.

Further compounding this problem is the fact that when deploying applications in multi-cloud and hybrid cloud environments, each public cloud provider has its own custom load balancer and management system. For example, AWS has its own Elastic Load Balancing solution, which is different from Microsoft’s Azure Load Balancer. This makes the task of automating application deployment even more complex and time-consuming. It also makes the task of applying a consistent set of policies across the different cloud environments more error-prone, as each load balancer has its own separate configuration.

So, What’s the Solution?

To keep pace with cloud-native applications, one needs an application access solution that enables the load balancer to dynamically manage new cloud-native applications as they are deployed and scaled.

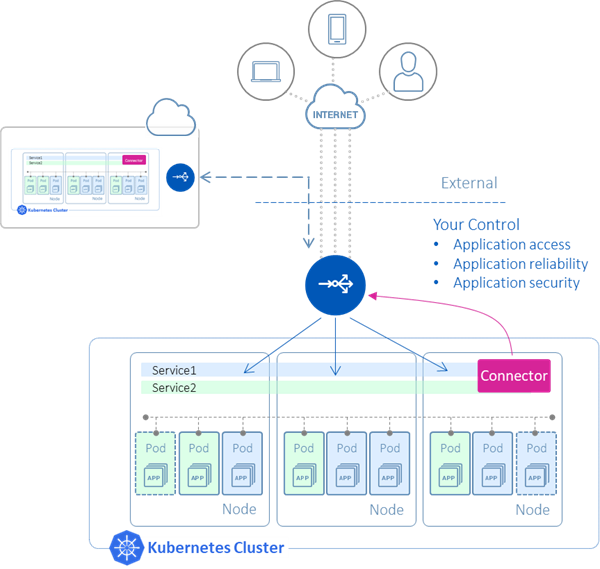

One way to achieve this is by deploying a connector agent that connects the load balancer to the cloud-native applications. Such a connector could monitor the lifecycle of the cloud-native applications, and automatically update the load balancer with information to route traffic to these applications. This would help eliminate the delays associated with the manual process.
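The connector idea described above can be pictured as a reconciliation step: compare the application endpoints currently observed with what the load balancer already knows, then apply only the difference. The following is a minimal sketch under stated assumptions, not any vendor's implementation. `InMemoryLoadBalancer`, `add_member`, `remove_member`, and `reconcile` are hypothetical names invented for illustration, and the in-memory class stands in for whatever configuration API a real load balancer exposes.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class InMemoryLoadBalancer:
    """Hypothetical stand-in for a load balancer's configuration API."""

    # Maps an application name to the set of endpoints receiving its traffic.
    pools: dict[str, set[str]] = field(default_factory=dict)

    def add_member(self, app: str, endpoint: str) -> None:
        self.pools.setdefault(app, set()).add(endpoint)

    def remove_member(self, app: str, endpoint: str) -> None:
        members = self.pools.get(app, set())
        members.discard(endpoint)
        if not members:
            # Drop the pool entirely once its last endpoint is gone.
            self.pools.pop(app, None)


def reconcile(
    lb: InMemoryLoadBalancer, observed: dict[str, set[str]]
) -> list[tuple[str, str, str]]:
    """Make the load balancer's pools match the observed endpoints.

    Returns the changes applied as (action, app, endpoint) tuples.
    """
    changes = []
    for app in sorted(set(lb.pools) | set(observed)):
        current = set(lb.pools.get(app, set()))  # copy, so mutation is safe
        desired = observed.get(app, set())
        for endpoint in sorted(desired - current):
            lb.add_member(app, endpoint)
            changes.append(("add", app, endpoint))
        for endpoint in sorted(current - desired):
            lb.remove_member(app, endpoint)
            changes.append(("remove", app, endpoint))
    return changes


if __name__ == "__main__":
    lb = InMemoryLoadBalancer()
    # Initial deployment: two instances of a hypothetical "web" application.
    print(reconcile(lb, {"web": {"10.0.0.1:8080", "10.0.0.2:8080"}}))
    # Later, one instance is replaced by another; only the difference is applied.
    print(reconcile(lb, {"web": {"10.0.0.2:8080", "10.0.0.3:8080"}}))
```

In practice a connector would run this kind of step whenever the platform reports a change to the application, so the load balancer follows scale-up, scale-down, and redeployment without a manual request to the network team.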

This process would work when deployed in a single cloud, but to truly make it work in multi-cloud and hybrid cloud environments, the solution would need to be available in different form factors, such as physical, virtual and container, so it can be deployed in both public and private clouds. Having a solution that works consistently across the different cloud environments also provides the associated benefit of being able to apply a consistent set of policies for accessing the application, irrespective of the cloud in which it is running.
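To illustrate why a consistent solution simplifies policy management, here is a small sketch in which one policy definition is rendered identically for every environment and any deviation is reported as drift. It is an assumption-laden illustration only: `AccessPolicy`, its fields, `render_everywhere`, and `find_drift` are hypothetical names, and the environment labels are placeholders rather than real configuration targets.

```python
from __future__ import annotations

from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AccessPolicy:
    """Hypothetical, vendor-neutral policy fields chosen for illustration."""

    max_requests_per_second: int
    max_bandwidth_mbps: int
    added_headers: tuple[tuple[str, str], ...] = ()


def render_everywhere(policy: AccessPolicy, environments: list[str]) -> dict[str, dict]:
    """Produce the same settings for every environment from one definition."""
    return {env: asdict(policy) for env in environments}


def find_drift(policy: AccessPolicy, deployed: dict[str, dict]) -> list[str]:
    """Return the environments whose deployed settings differ from the policy."""
    expected = asdict(policy)
    return sorted(env for env, settings in deployed.items() if settings != expected)


if __name__ == "__main__":
    policy = AccessPolicy(
        max_requests_per_second=100,
        max_bandwidth_mbps=50,
        added_headers=(("X-Forwarded-Proto", "https"),),
    )
    deployed = render_everywhere(policy, ["aws", "azure", "on-prem"])
    # Simulate a manual, per-cloud change that breaks consistency.
    deployed["azure"]["max_requests_per_second"] = 500
    print(find_drift(policy, deployed))
```

When each cloud uses a different load balancer with its own configuration format, the single `asdict(policy)` rendering would have to be replaced by one translation per provider, which is where inconsistencies tend to creep in.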

Finally, the solution should have deep integration with automation tools such as Terraform, Ansible and Helm, so that the whole application deployment process can be automated.

How A10 Can Help

A10 Networks provides such an application access solution using two components, the Thunder Kubernetes Connector (TKC) and Thunder ADC:

Thunder Kubernetes Connector: The TKC enables automated configuration of the Thunder ADC as a load balancer for a cloud-native application. To do so, it runs as a container alongside the applications.

Thunder ADC: The Thunder ADC is the load balancer that sits in front of the cloud-native applications and makes them accessible to end users. Thunder ADC is available in multiple form factors, such as virtual edition, bare metal, hardware appliance, and containers, and can be deployed in both multi-cloud and hybrid cloud environments. Having the same Thunder ADC solution across the clouds also allows enforcement of a consistent set of policies to access the applications.

Furthermore, A10’s application access solution supports easy integration into existing DevOps processes through its support for third-party automation tools such as Terraform and Ansible.

All this makes the process of deploying cloud-native applications in multi-cloud and hybrid cloud environments automated and much faster. Additionally, with A10’s solution, organizations get a central point of control to apply policies such as:

- Security

- Bandwidth rate-limiting

- Application rate-limiting

- HTTP header enrichment

This centralized management also enables faster troubleshooting and root cause analysis, which helps keep application availability high and, in turn, customer satisfaction.

A10 solutions make the application deployment process not only fast, simple and scalable, but also secure and reliable.

How to Get Started

To see a demo of the TKC in action, watch the A10 webinar on Advanced Application Access for Kubernetes.

To get hands-on with TKC, download the TKC image from the Docker repository and follow the steps outlined in the TKC configuration guide.