Selecting the Best ADC Scale-out Solution for Your Environment

With the massive number of connected devices and increasingly dynamic business models, it is essential to have an elastic application delivery system that can easily increase or decrease capacity as needed, whether in a cloud environment or an on-premises datacenter.

For example, a flash sale might draw an exponential increase in traffic to a website for only a short period of time. Or a favorable mention in social media might make a certain website go viral, resulting in a huge traffic jump. These scenarios can prove to be a challenge for a fairly static infrastructure.

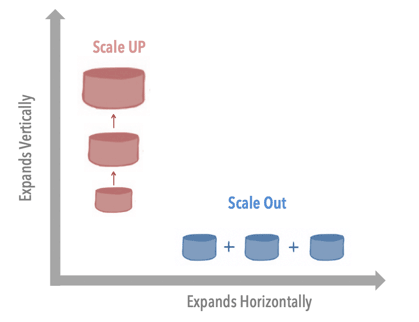

Scale-out vs. Scale-up

There are two primary ways to build an elastic system: scaling up and scaling out.

A scale-up approach expands the infrastructure vertically, achieving the goal by adding more resources, such as CPU, memory, I/O or disks, to the existing instance. Some vendors might provide a scale-up solution using a chassis plus blade hardware, while others might use a software license to raise the previously license-capped performance of the same instance.

A scale-out approach expands the infrastructure horizontally, adding new instances next to existing ones. All of these instances, which could be hardware appliances, virtual machines or cloud-based software deployments, now form a cluster and are able to provide more capacity.

The table below provides a quick comparison between these two techniques:

The scale-out approach clearly has technical advantages and is now the default option for creating elastic infrastructures for applications. For example, AWS Auto Scaling is a typical scale-out solution; SAP HANA is another.
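To make the elasticity idea concrete, here is a minimal sketch of how a scale-out controller might decide how many instances to run for a given load. It is an illustration only, not how AWS Auto Scaling or any A10 Networks product works; the function name, the 70% target utilization, the two-node minimum and the requests-per-second figures are all assumptions.

```python
import math


def desired_nodes(offered_load: float,
                  per_node_capacity: float,
                  target_utilization: float = 0.7,
                  min_nodes: int = 2) -> int:
    """Return how many equal-sized nodes are needed for the offered load.

    All parameters are hypothetical: loads and capacities are in the same
    unit (for example requests per second), and each node is kept at or
    below target_utilization so that there is headroom for bursts.
    """
    if per_node_capacity <= 0:
        raise ValueError("per_node_capacity must be positive")
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    usable_per_node = per_node_capacity * target_utilization
    needed = math.ceil(offered_load / usable_per_node)
    # Never drop below the minimum, so one node failure cannot take
    # the whole service down.
    return max(min_nodes, needed)


if __name__ == "__main__":
    # Normal day versus a flash sale, with made-up numbers.
    print(desired_nodes(10_000, 5_000))
    print(desired_nodes(50_000, 5_000))
```

Scaling in uses the same calculation: when the load falls, the result shrinks and the surplus instances can be removed.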

The A10 Networks Approach to ADC Scale-out

A10 Networks provides a set of application delivery controller (ADC) scale-out features with its Advanced Core Operating System (ACOS). Here are the top four advantages.

The same application could run concurrently across multiple ADC members (nodes) in a cluster.

This might be the most important differentiator of the A10 Networks scale-out solution. By enabling multiple ADCs to run the same virtual IP / application at the same time, it essentially removes the capacity limit imposed by a single hardware or virtual instance and allows the admin to increase overall capacity by adding nodes.

Redundancy is achieved inside the cluster with session sync.

While it is crucial to be able to run the same virtual IP / application across multiple devices, it is almost as important to have an internal redundancy mechanism. Admins need to consider what will happen when the number of nodes in a cluster increases or decreases, and in particular what happens to the current user sessions on a node if it goes down. This could be triggered either by a scale-in configuration change or by an unexpected outage. Will users be logged out, or will their ongoing transactions be disrupted? Most mission-critical applications cannot afford such a failure.

The A10 Networks solution provides session sync among the nodes in a cluster to avoid any application interruption. The user sessions on each node are synced to other nodes in the cluster using an internal traffic map algorithm, so that they survive if that node goes down. For example, suppose the sessions of user A and user B both reside on node one; user A’s session could be synced between node one and node two, while user B’s session could be synced between node one and node three. If node one goes down, both users’ sessions could continue without interruption on other nodes in the cluster.
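The article does not describe the internal traffic map algorithm, so the following is only a rough sketch of the general idea: give every session a primary node and a backup node, and let the backup take over if the primary fails. It uses rendezvous (highest-random-weight) hashing as a stand-in technique; the function names and node names are hypothetical and do not reflect ACOS internals.

```python
import hashlib


def _weight(node: str, session_id: str) -> str:
    """Deterministic pseudo-random weight for a (node, session) pair."""
    return hashlib.sha256(f"{node}:{session_id}".encode()).hexdigest()


def pick_nodes(session_id: str, nodes: list[str]) -> tuple[str, str]:
    """Return (primary, backup) for a session via rendezvous hashing.

    The node with the highest weight owns the session and the runner-up
    holds the synced copy, so different sessions on the same primary can
    have different backups.
    """
    if len(nodes) < 2:
        raise ValueError("need at least two nodes for redundancy")
    ranked = sorted(nodes, key=lambda n: _weight(n, session_id), reverse=True)
    return ranked[0], ranked[1]


def owner_after_failure(session_id: str, nodes: list[str], failed: str) -> str:
    """Return the node that serves the session once `failed` is down."""
    primary, backup = pick_nodes(session_id, nodes)
    return backup if primary == failed else primary


if __name__ == "__main__":
    cluster = ["node1", "node2", "node3"]
    for sid in ("user-a", "user-b"):
        primary, backup = pick_nodes(sid, cluster)
        print(sid, "primary:", primary, "backup:", backup)
        print("  after primary failure ->",
              owner_after_failure(sid, cluster, primary))
```

A useful property of this stand-in is that removing a node only moves the sessions that node owned, and each of them moves to the node that already holds its synced copy.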

The scale-out solution provides flexibility in assigning different sets of ADC nodes to certain applications.

Admins have the freedom to decide how each application should use the nodes in a cluster. For example, in a cluster that has three nodes, application A could run simultaneously on all nodes, while the admin could configure application B to use only nodes one and two. By adjusting another parameter, admins could even achieve the same VRRP/HA behavior that would traditionally run on an active/standby pair. For example, application C could run as active on node three and as standby on node two.

The same scale-out approach works not only with traditional hardware appliances but also in virtualized and even multi-cloud deployments. Admins have the ability and freedom to migrate from on-premises datacenters to the cloud or vice versa.
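The three-node example above can be modeled as a small placement table. This is a hypothetical data model for illustration, not ACOS configuration syntax; the class, field and node names are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class AppPlacement:
    """Which cluster nodes an application runs on (hypothetical model)."""
    name: str
    active: set[str]
    standby: set[str] = field(default_factory=set)


CLUSTER = {"node1", "node2", "node3"}

PLACEMENTS = {
    # Application A runs on every node at the same time.
    "app-a": AppPlacement("app-a", active={"node1", "node2", "node3"}),
    # Application B is restricted to nodes one and two.
    "app-b": AppPlacement("app-b", active={"node1", "node2"}),
    # Application C behaves like a classic active/standby pair.
    "app-c": AppPlacement("app-c", active={"node3"}, standby={"node2"}),
}


def validate(placement: AppPlacement, cluster: set[str]) -> None:
    """Reject placements that name unknown nodes or overlap roles."""
    unknown = (placement.active | placement.standby) - cluster
    if unknown:
        raise ValueError(f"{placement.name}: unknown nodes {sorted(unknown)}")
    if placement.active & placement.standby:
        raise ValueError(f"{placement.name}: node is both active and standby")


def serving_nodes(placement: AppPlacement, healthy: set[str]) -> set[str]:
    """Return the nodes currently serving the application.

    Healthy active nodes serve traffic; standby nodes take over only
    when no active node is left.
    """
    live = placement.active & healthy
    return live if live else placement.standby & healthy


if __name__ == "__main__":
    for p in PLACEMENTS.values():
        validate(p, CLUSTER)
    healthy = CLUSTER - {"node3"}  # simulate node three going down
    for name, p in PLACEMENTS.items():
        print(name, sorted(serving_nodes(p, healthy)))
```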

Conclusion

When considering the best elastic ADC scale-out solution, ask your vendors these four questions:

- Can I run the same application across multiple instances at the same time?

- If so, what happens to my users if one of the instances goes down?

- Do I have the ability to use different sets of instances for different applications?

- Can I use the same technologies on hardware in on-premises datacenters as well as on software in private and public clouds?